Building the Bridge: Running JavaScript Modules from Dart

Chima Precious, Software Engineer @Globe

When we started exploring Dart for our backend and tooling needs, we knew we were betting on a relatively young ecosystem. Dart is fast, type-safe, and has solid concurrency support, but it’s missing one thing that JavaScript has in spades: an enormous, battle-tested module ecosystem.

We didn’t want to rebuild libraries like the AI SDK or database drivers from scratch. Nor did we want to force Dart developers to drop into another ecosystem every time they needed a mature SDK.

So we built a bridge. A seamless, low-latency, embeddable way to run TypeScript/JavaScript modules inside Dart, as if they were native Dart code.

This is the story of how we did it, and what we learned along the way.

The Problem: Dart is Good, but Early

Dart has a lot going for it: great concurrency with isolates, good performance, and strong cross-platform tooling, as seen in projects like Flutter. But when you look at the state of SDKs and libraries in the Dart ecosystem, especially around modern AI, cloud APIs, and data processing, you’ll find a lot of blanks.

Want to use the official OpenAI SDK? You’re out of luck. Need to use a modern streaming tokenizer or a new S3 upload API? It’s likely written for Node.js or some other ecosystem.

That meant either:

- Porting JS SDKs to Dart manually (slow, costly, error-prone)

- Writing wrappers in another language and RPCing from Dart (complex)

- Or… finding a way to run JavaScript directly from Dart.

The Spark: What If Dart Could Run JavaScript Modules?

This wasn’t just about running a few JS snippets with dart:js or a headless engine. We wanted:

- Full support for Node.js modules, even ESM and CommonJS hybrids.

- Native-like performance and low latency.

- A clean Dart API that feels like using any other Dart SDK.

- Streaming, callback support, and complex data structures.

It sounded like a moonshot, until we realized we didn’t need to build a JS engine from scratch.

Enter Deno, Rust, and V8

Instead of embedding Node.js or hacking together a JS interpreter, we turned to Deno.

Deno’s runtime, written in Rust, offers deep bindings to V8, module loading, and execution control, all the right primitives for hosting JS modules in a controlled environment.

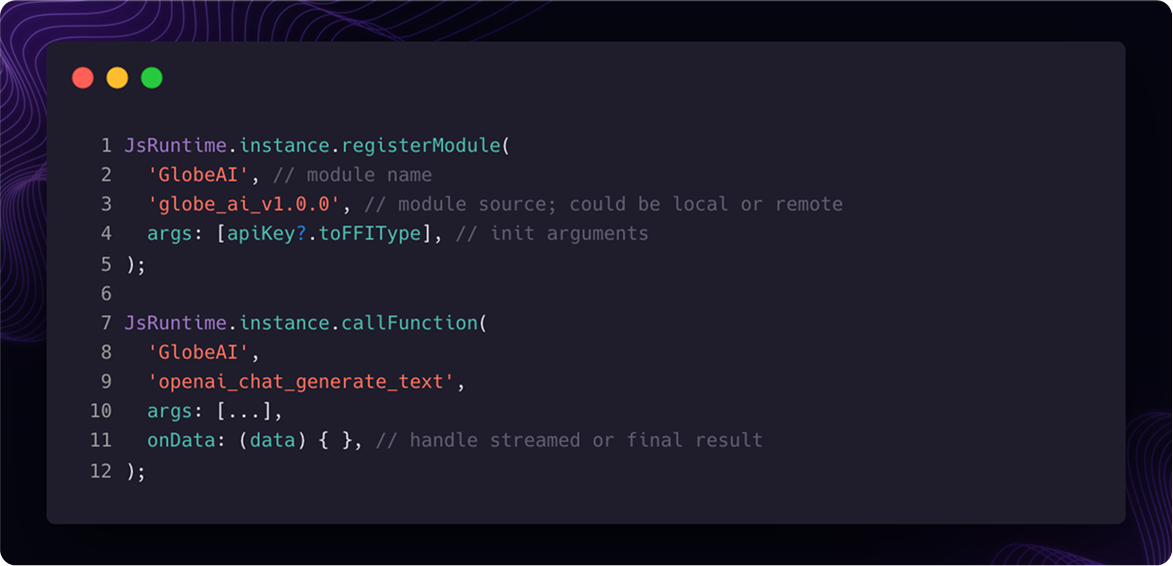

So we built a portable JS runtime using Deno’s tooling and V8 glue code, compiled into a native binary we could embed. On the Dart side, we used FFI to interact with this native code, setting up a runtime entry point that could load modules, pass function arguments, and stream responses back into Dart.

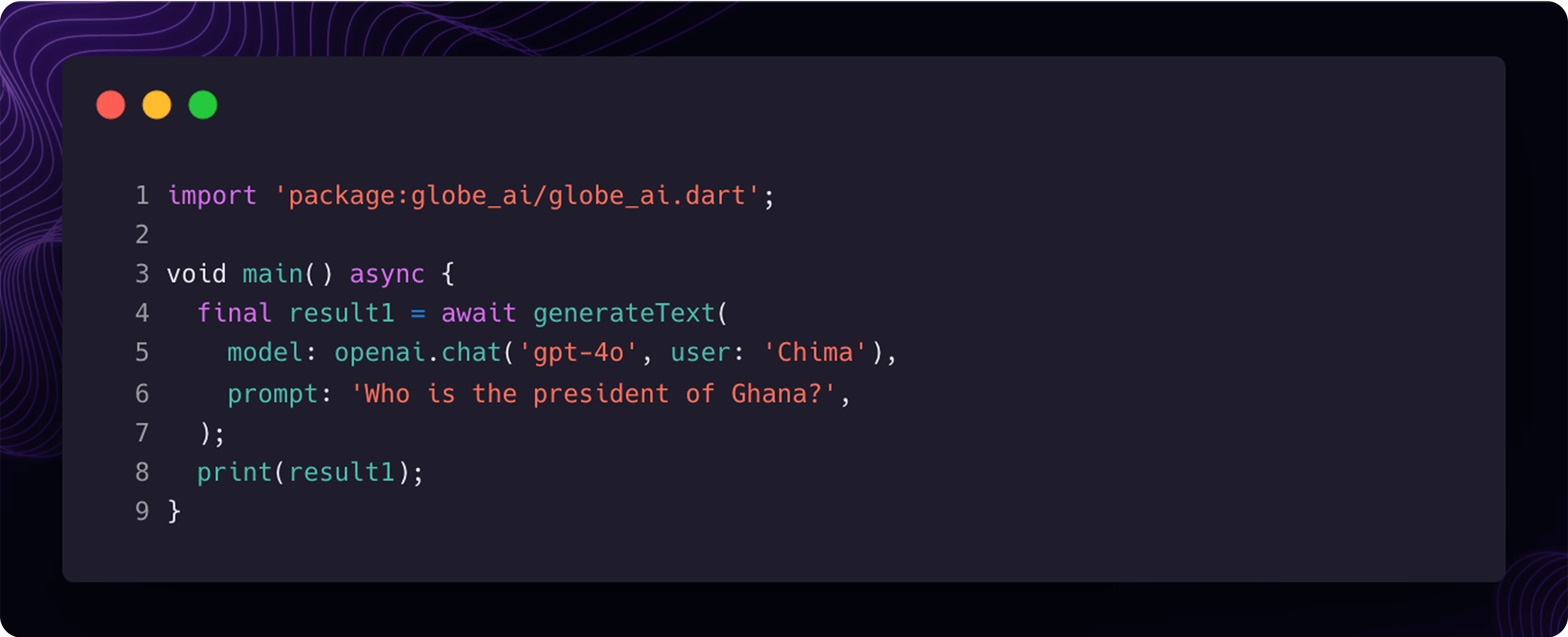

Under the hood, this Dart code is calling JavaScript’s ai.generateText(...), through V8, Rust, and a streaming FFI interface, without any RPC servers, temporary files, or glue scripts.

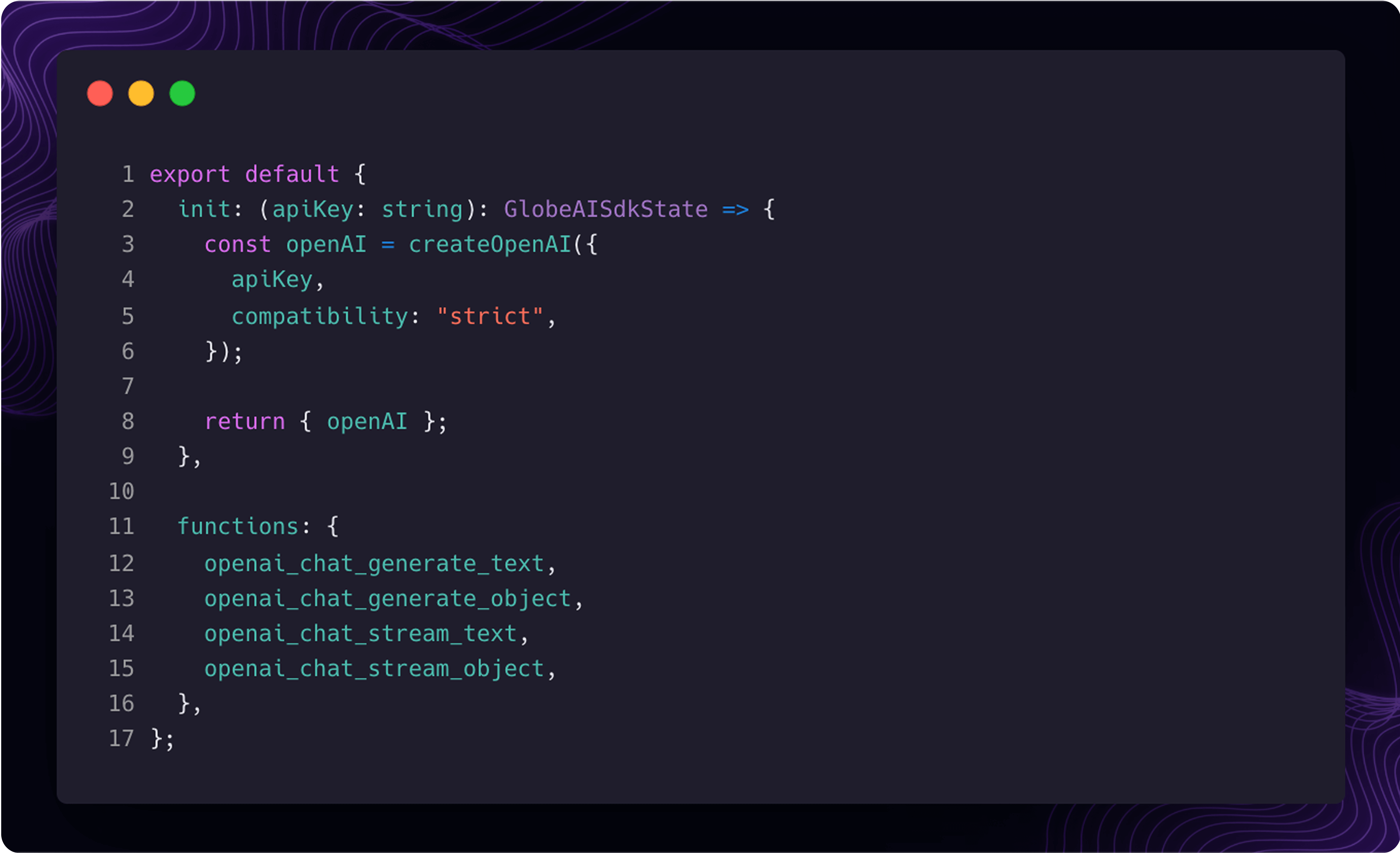

The Interface: How It Works

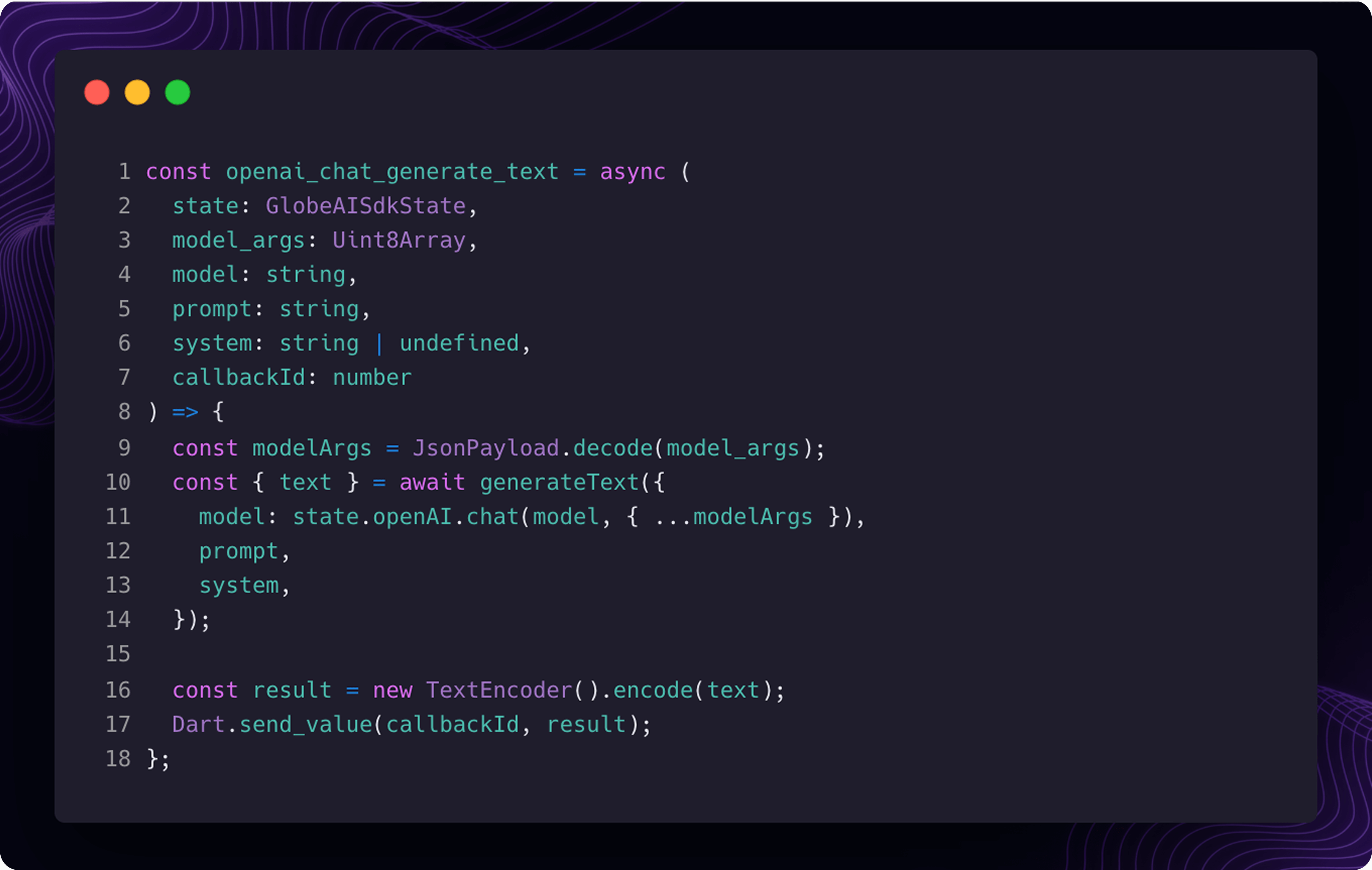

On the Dart side, we expose a simple API via a class called JsRuntime. You can register modules, call functions by name, and receive responses asynchronously.

You write JavaScript like JavaScript. Dart sees it as a native SDK.
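To make the "register modules, call functions by name" idea concrete, here is a minimal TypeScript sketch of a name-based dispatcher. It is not the JsRuntime or globe_runtime API: `ModuleRegistry`, `register`, `call`, and the `text` module are hypothetical names chosen for illustration, and the real bridge crosses FFI rather than staying inside one JavaScript process.

```typescript
// Hypothetical sketch: none of these names come from globe_runtime.
type ModuleFn = (...args: unknown[]) => unknown | Promise<unknown>;
type JsModule = Record<string, ModuleFn>;

class ModuleRegistry {
  private readonly modules = new Map<string, JsModule>();

  // Make a module's functions available under a name.
  register(name: string, mod: JsModule): void {
    if (this.modules.has(name)) {
      throw new Error(`module "${name}" is already registered`);
    }
    this.modules.set(name, mod);
  }

  // Look up a function by module and function name, then await its result.
  // Sync and async functions are handled the same way from the caller's side.
  async call(
    moduleName: string,
    fnName: string,
    args: unknown[] = [],
  ): Promise<unknown> {
    const mod = this.modules.get(moduleName);
    if (mod === undefined) {
      throw new Error(`unknown module "${moduleName}"`);
    }
    // Only accept the module's own properties, not inherited ones like toString.
    const owned = Object.prototype.hasOwnProperty.call(mod, fnName);
    const fn = owned ? mod[fnName] : undefined;
    if (typeof fn !== "function") {
      throw new Error(`"${moduleName}" has no function "${fnName}"`);
    }
    return await fn(...args);
  }
}

// Example module: plain JavaScript-style functions, one sync and one async.
const registry = new ModuleRegistry();
registry.register("text", {
  shout: (input) => String(input).toUpperCase(),
  wordCount: async (input) => String(input).trim().split(/\s+/).length,
});
```

A caller would then write something like `await registry.call("text", "shout", ["hello"])`. The point of the sketch is the shape of the contract: the caller only needs a module name, a function name, and serializable arguments, which is what makes it practical to put a typed wrapper on top.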

Streams, Callbacks, and Serialization

Supporting real-time streaming APIs, like OpenAI’s chat streaming endpoint, meant we had to think carefully about how data flowed between Dart and JavaScript.

At first, we used MessagePack for serialization. It was compact, fast, and worked well for basic data passing. But as our use cases grew more complex, with deeply nested types, optional fields, and evolving schemas, it became harder to enforce consistency between Dart and JavaScript.

That’s when we added Protobufs into the mix.

Protobuf gave us two huge benefits:

- A strict, shared contract between Dart and TypeScript via generated types.

- Confidence in compatibility, especially for streams of partial updates, deltas, or error objects.

This let us define types like ChatCompletion, ChatCompletionChunk, and Usage once and generate matching classes in both runtimes, removing ambiguity and reducing runtime bugs.
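For a sense of what a shared Protobuf contract means on the wire, the sketch below hand-encodes and decodes one small message using the standard Protobuf wire format (varints and length-delimited fields). The `ChunkSketch` message and its field numbers are assumptions made up for this example, not the schema used by the bridge, and in practice generated code would do this work rather than hand-written functions.

```typescript
// Hypothetical message, for illustration only:
//   message ChunkSketch { string delta = 1; uint32 index = 2; }
interface ChunkSketch {
  delta: string;
  index: number;
}

// Append a base-128 varint (unsigned 32-bit range) to the output bytes.
function writeVarint(value: number, out: number[]): void {
  let v = value >>> 0;
  while (v > 0x7f) {
    out.push((v & 0x7f) | 0x80); // low 7 bits, continuation bit set
    v >>>= 7;
  }
  out.push(v);
}

// Read a varint starting at pos; returns [value, position after the varint].
function readVarint(buf: Uint8Array, pos: number): [number, number] {
  let result = 0;
  let shift = 0;
  for (;;) {
    if (pos >= buf.length) throw new Error("truncated varint");
    const byte = buf[pos];
    pos += 1;
    result += (byte & 0x7f) * 2 ** shift;
    if ((byte & 0x80) === 0) return [result, pos];
    shift += 7;
  }
}

function encodeChunk(chunk: ChunkSketch): Uint8Array {
  const out: number[] = [];
  const text = new TextEncoder().encode(chunk.delta);
  out.push(0x0a); // tag: field 1, wire type 2 (length-delimited)
  writeVarint(text.length, out);
  text.forEach((b) => out.push(b));
  out.push(0x10); // tag: field 2, wire type 0 (varint)
  writeVarint(chunk.index, out);
  // Note: this sketch always writes both fields, even when they hold defaults.
  return Uint8Array.from(out);
}

function decodeChunk(buf: Uint8Array): ChunkSketch {
  const chunk: ChunkSketch = { delta: "", index: 0 };
  let pos = 0;
  while (pos < buf.length) {
    const [tag, afterTag] = readVarint(buf, pos);
    const field = tag >>> 3;
    const wireType = tag & 0x07;
    if (field === 1 && wireType === 2) {
      const [len, start] = readVarint(buf, afterTag);
      if (start + len > buf.length) throw new Error("truncated string");
      chunk.delta = new TextDecoder().decode(buf.subarray(start, start + len));
      pos = start + len;
    } else if (field === 2 && wireType === 0) {
      const [value, next] = readVarint(buf, afterTag);
      chunk.index = value;
      pos = next;
    } else {
      // A full decoder would skip unknown fields; this sketch rejects them.
      throw new Error(`unexpected field ${field} (wire type ${wireType})`);
    }
  }
  return chunk;
}
```

Because both sides agree on field numbers and wire types, a chunk encoded in one runtime decodes to the same values in the other, which is the property a schema-less format leaves to convention.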

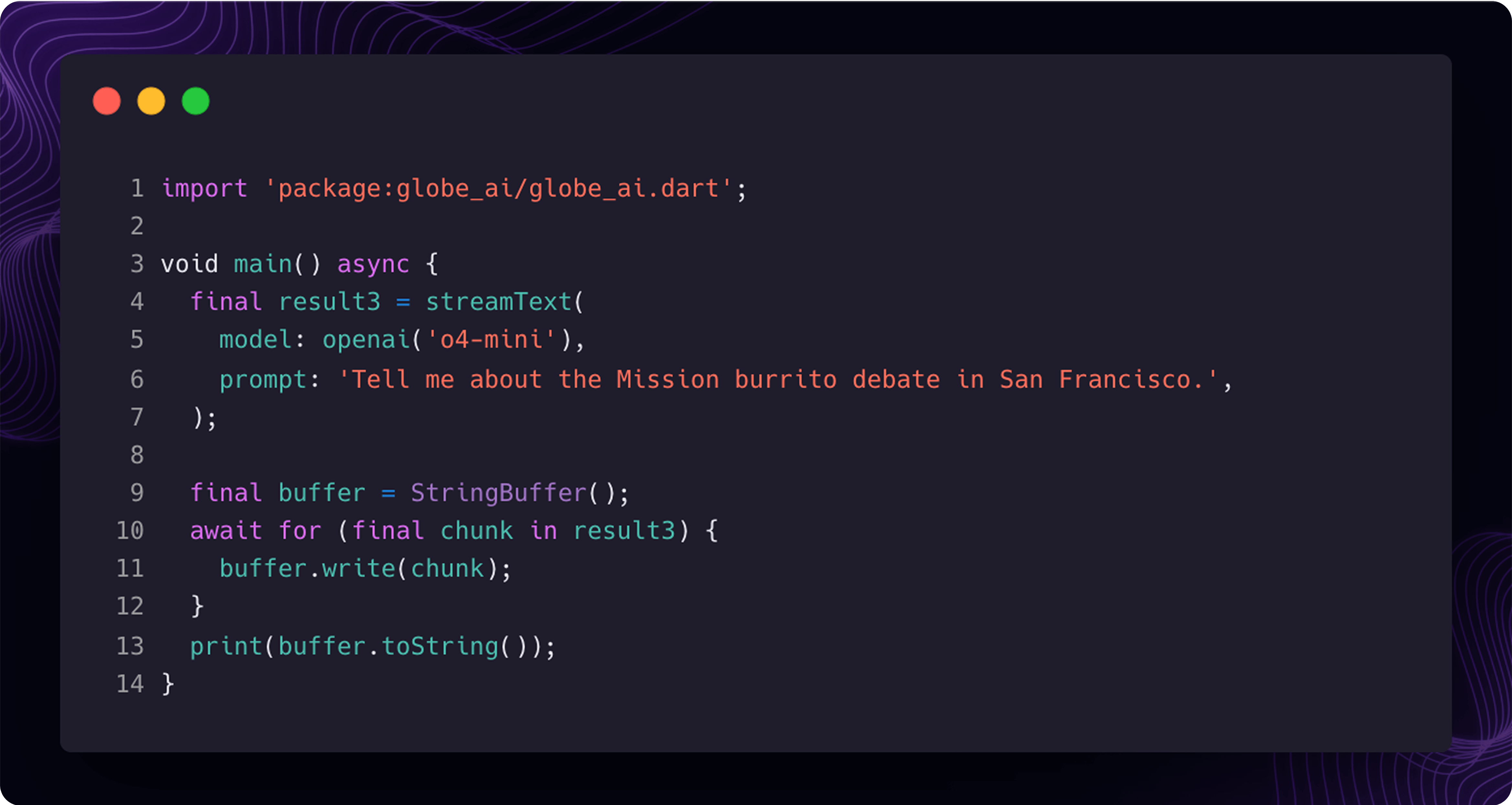

It also made streaming dead simple:

Each streamed chunk is deserialized into a typed Dart object, just as it would be in a fully native SDK. And since everything travels through our Rust layer over FFI, it’s fast and consistent, even for high-frequency streams or large payloads.
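One way to picture the streaming flow is as a push-to-pull adapter: the producer pushes chunks through a callback, and the consumer reads them as an asynchronous sequence, much as a Dart caller would listen to a Stream. The TypeScript sketch below shows that pattern in isolation. `ChunkStream`, `push`, `close`, and `collect` are hypothetical names, and the sketch assumes a single consumer; it does not describe how the bridge itself is implemented.

```typescript
// Hypothetical sketch of a push-to-pull adapter; single consumer only.
class ChunkStream<T> implements AsyncIterable<T> {
  private readonly buffered: T[] = [];
  private waiting: ((result: IteratorResult<T>) => void) | null = null;
  private closed = false;

  // Called by the producer for every chunk it emits.
  push(chunk: T): void {
    if (this.closed) return; // ignore chunks after close
    if (this.waiting !== null) {
      // A consumer is already waiting: hand the chunk over directly.
      const resolve = this.waiting;
      this.waiting = null;
      resolve({ value: chunk, done: false });
    } else {
      this.buffered.push(chunk);
    }
  }

  // Called by the producer when no more chunks will arrive.
  close(): void {
    this.closed = true;
    if (this.waiting !== null) {
      const resolve = this.waiting;
      this.waiting = null;
      resolve({ value: undefined, done: true });
    }
  }

  [Symbol.asyncIterator](): AsyncIterator<T> {
    return {
      next: (): Promise<IteratorResult<T>> => {
        // Drain buffered chunks first, even if the stream is already closed.
        if (this.buffered.length > 0) {
          const item: IteratorResult<T> = {
            value: this.buffered.shift() as T,
            done: false,
          };
          return Promise.resolve(item);
        }
        if (this.closed) {
          const end: IteratorResult<T> = { value: undefined, done: true };
          return Promise.resolve(end);
        }
        // Nothing available yet: park until push() or close() is called.
        return new Promise<IteratorResult<T>>((resolve) => {
          this.waiting = resolve;
        });
      },
    };
  }
}

// Consume any async sequence into an array, in arrival order.
async function collect<T>(stream: AsyncIterable<T>): Promise<T[]> {
  const out: T[] = [];
  for await (const chunk of stream) {
    out.push(chunk);
  }
  return out;
}
```

In a setup like the one described above, each pushed value would be a serialized chunk that is decoded into a typed object before the consumer sees it; the adapter only takes care of ordering and completion.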

Serialization may have started as a detail, but in the end, it became one of the core reasons this bridge feels reliable to use.

What We Gained

- Time saved: We do not have to rewrite SDKs like OpenAI or Cohere in Dart.

- Future-proofing: As the JS ecosystem evolves, we can adopt new tools immediately.

- Consistency: Same interface across Dart and JS, no glue code divergence.

- Performance: Calls into JavaScript happen within the same process, avoiding IPC bottlenecks.

We’re continuing to refine the runtime and improve stream handling, and we may explore hot-reloading for TypeScript modules during development.

If Dart is your core language but you miss the maturity of Node.js and JavaScript libraries, this bridge might be the thing you didn’t know you needed.

🔗 Explore the tools:

- 🧠 globe_ai: Dart package using the bridge to run OpenAI-compatible LLMs.

- ⚙️ globe_runtime: The embedded V8 + TypeScript runtime behind the bridge.

Have questions or want to try it out? We’re open to collaborators, feedback, and ideas. Join our Discord to chat.